Andy Paterson • October 23rd, 2025.

When a company receives a weather forecast, it will only use that forecast if it feels confident the forecast can be trusted. Hindcasting builds that trust.

By examining how weather and climate model forecasts performed against actual historical weather conditions and other benchmark predictions, weather prediction modelers can demonstrate that their models are accurate and outperform others.

Skepticism is natural, especially when weather forecasts regularly change, different models disagree with each other, and some models have been proven wrong. However, hindcasting weather and climate models can demonstrate that they are reliable and can be trusted.

This blog post examines what hindcasting is, the importance of trusting your forecasts, how hindcasting is performed, and ClimateAi’s hindcasting performance compared to benchmarks.

Hindcasting means testing a forecasting model by seeing how well it would have predicted past events. In other words, we run the model on historical data to check if its forecasts match what actually happened. Importantly, each run is done as if we were back in that moment – that is, the model can’t “peek” at any data from the future. This makes hindcasting the most reliable way to test whether a forecasting model works and how well it works.
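The "no peeking" rule can be made concrete with a small sketch. The Python below is illustrative only and is not ClimateAi's implementation: `toy_model`, `run_hindcast`, and the temperature values are hypothetical stand-ins. At each forecast origin, the model sees only the observations recorded before that origin, and its forecast is paired with what actually happened afterward.

```python
from statistics import mean


def toy_model(history, window=3):
    """Hypothetical stand-in model: forecast the mean of the last `window` values."""
    return mean(history[-window:])


def run_hindcast(observations, lead=1, min_history=3):
    """Replay the past, forecasting from each origin with no access to later data."""
    results = []
    for origin in range(min_history, len(observations) - lead + 1):
        history = observations[:origin]            # only data known at the origin
        forecast = toy_model(history)
        actual = observations[origin + lead - 1]   # what happened `lead` steps later
        results.append((origin, forecast, actual))
    return results


# Made-up daily temperatures (degrees C), for illustration only.
temps = [10.0, 12.0, 11.0, 13.0, 15.0, 14.0]
hindcast = run_hindcast(temps)
for origin, forecast, actual in hindcast:
    print(f"origin={origin} forecast={forecast:.1f} actual={actual:.1f}")
```

Slicing the history at the origin is what keeps the test honest: if the model were fitted on the full series first, its hindcast scores would look better than its real-world forecasts ever could.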

Hindcasting also allows a model to be compared against benchmark forecasts, such as those from other weather forecasters like NOAA, to determine the model’s skill relative to others. Additionally, hindcasting can be used to determine accuracy over different lead times for specific weather events.

Performing hindcasts builds confidence that forecasts using that model will work in the future and will help forecasters hone their models for improved accuracy.

Accurate weather forecasts can save lives, assets, and critical infrastructure. Or, if you are an agricultural company, they can help you maintain high yields and optimize your logistics and supply chains.

Research has suggested that forecasts that are 50% more accurate could save 2,200 US lives a year. And for every 1% improvement in forecast accuracy, a weather-sensitive company can increase economic output by 2-3%.

To give an example, ClimateAi accurately forecasted Florida’s very high risk of hurricane impacts in 2022 long before Hurricane Ian formed and impacted the state, providing a roofing company with the information they needed to lock in their supply early and increase their sales by $15 million.

To measure hindcast accuracy, forecasts are assessed against actual historical data. We can find the average gap between what was forecasted and what actually happened, and compare that to benchmarks.

This four-step process, which ClimateAi uses to measure accuracy, helps us build confidence with our users and improve our models.

We take years of historical forecasts and compare predicted and observed data (temperature, precipitation, yields, etc.) across many locations and multiple lead times, from one day to six months ahead.

The validation metric we use most often to assess accuracy is the Mean Absolute Error (MAE), the average absolute gap between what was forecasted and what actually happened, though other statistical measures of skill are also used at times.
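As a rough illustration of how MAE and a benchmark comparison can be calculated, here is a minimal Python sketch. The function names and all numbers are hypothetical and chosen only to make the arithmetic easy to follow; they are not ClimateAi results or code.

```python
def mean_absolute_error(forecasts, observations):
    """Average absolute gap between forecasted and observed values."""
    if not forecasts or len(forecasts) != len(observations):
        raise ValueError("forecasts and observations must be non-empty and equal length")
    return sum(abs(f - o) for f, o in zip(forecasts, observations)) / len(forecasts)


def percent_lower_error(model_mae, benchmark_mae):
    """How much lower the model's MAE is than the benchmark's, in percent."""
    return 100.0 * (benchmark_mae - model_mae) / benchmark_mae


# Made-up temperatures (degrees C), for illustration only.
observed = [20.0, 22.0, 19.0, 21.0]
model = [21.0, 22.0, 18.0, 21.0]
benchmark = [22.0, 22.0, 18.0, 22.0]

model_mae = mean_absolute_error(model, observed)          # 0.5
benchmark_mae = mean_absolute_error(benchmark, observed)  # 1.0
improvement = percent_lower_error(model_mae, benchmark_mae)
print(f"model MAE={model_mae}, benchmark MAE={benchmark_mae}, {improvement:.0f}% lower error")
```

In this toy case the model's average miss is half a degree against the benchmark's one degree, which is reported as 50% lower error. The same calculation can be repeated per location and per lead time.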

That said, using seasonal forecasts effectively requires looking beyond single accuracy scores. We also recommend examining probabilistic measures, since our forecasts describe a range of possible outcomes rather than one deterministic result. Understanding the spread and likelihood of different scenarios is key to making informed, risk-aware decisions when using subseasonal and seasonal forecasts.
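One widely used probabilistic measure is the Brier score, which scores forecast probabilities against whether an event actually occurred (lower is better). The post does not say which probabilistic measures ClimateAi uses, so the Python sketch below is an assumption for illustration: the ensembles, the 30 °C threshold, and the function names are all hypothetical.

```python
def exceedance_probability(ensemble, threshold):
    """Share of ensemble members above the threshold."""
    return sum(1 for member in ensemble if member > threshold) / len(ensemble)


def brier_score(probabilities, outcomes):
    """Mean squared gap between forecast probability and outcome (1 = happened, 0 = did not)."""
    return sum((p - o) ** 2 for p, o in zip(probabilities, outcomes)) / len(probabilities)


# Two made-up forecasts, each a range of possible temperatures (degrees C).
ensembles = [
    [29.0, 31.0, 32.0, 33.0],
    [25.0, 27.0, 28.0, 31.0],
]
observed = [32.5, 26.0]
threshold = 30.0  # hypothetical heat threshold

probabilities = [exceedance_probability(e, threshold) for e in ensembles]
outcomes = [1 if value > threshold else 0 for value in observed]
score = brier_score(probabilities, outcomes)
print(probabilities, outcomes, score)
```

Here the first forecast gives a 75% chance of exceeding the threshold and the event happens, while the second gives 25% and it does not. A forecast that expresses likelihood this way can be judged on whether its stated probabilities hold up over many past cases.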

We evaluate accuracy across different time horizons: short-term (1–14 days), subseasonal (3–6 weeks), and seasonal (2–6 months).

This ensures ClimateAi’s forecasts perform consistently across both near-term and long-range planning windows.

Beyond large-scale validation, ClimateAi also conducts event-based hindcasts, for example, testing how well models predicted frost or rainfall 3, 7, or 9 days before the event. These targeted evaluations help fine-tune regional performance and support customer-specific adaptation use cases.

Our platform is built to localize and calibrate forecasts at a granularity of 1 km x 1 km.

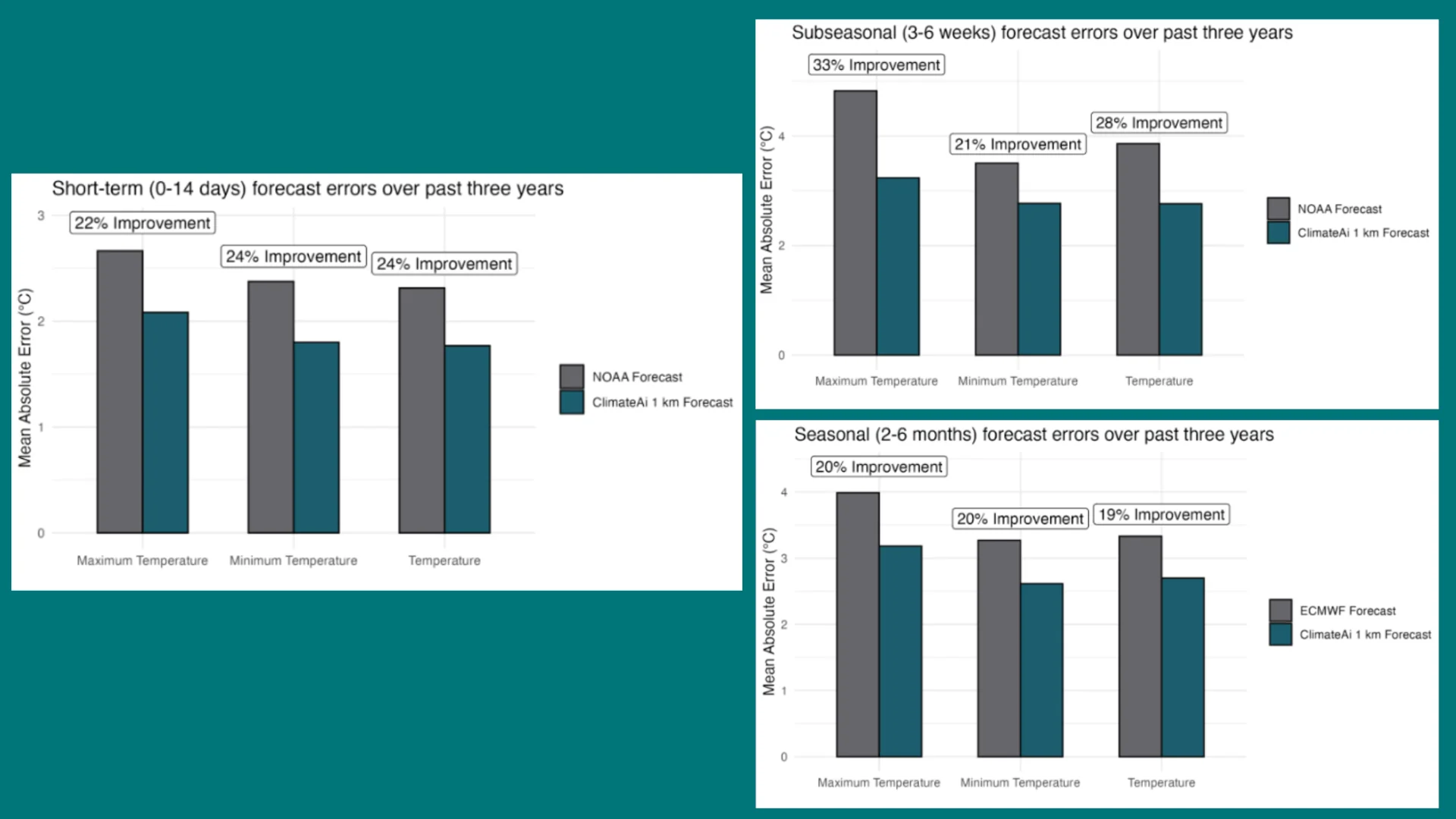

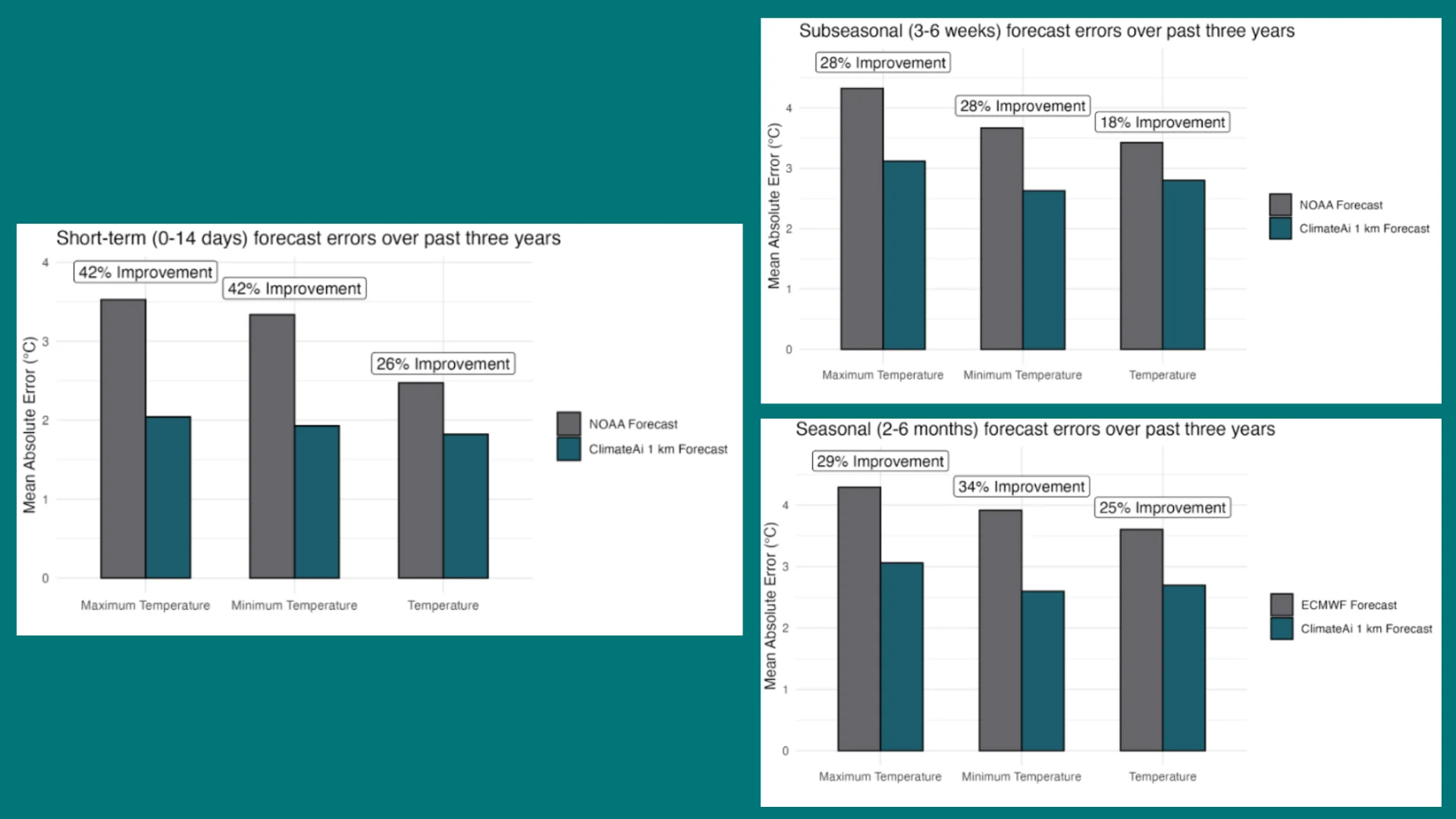

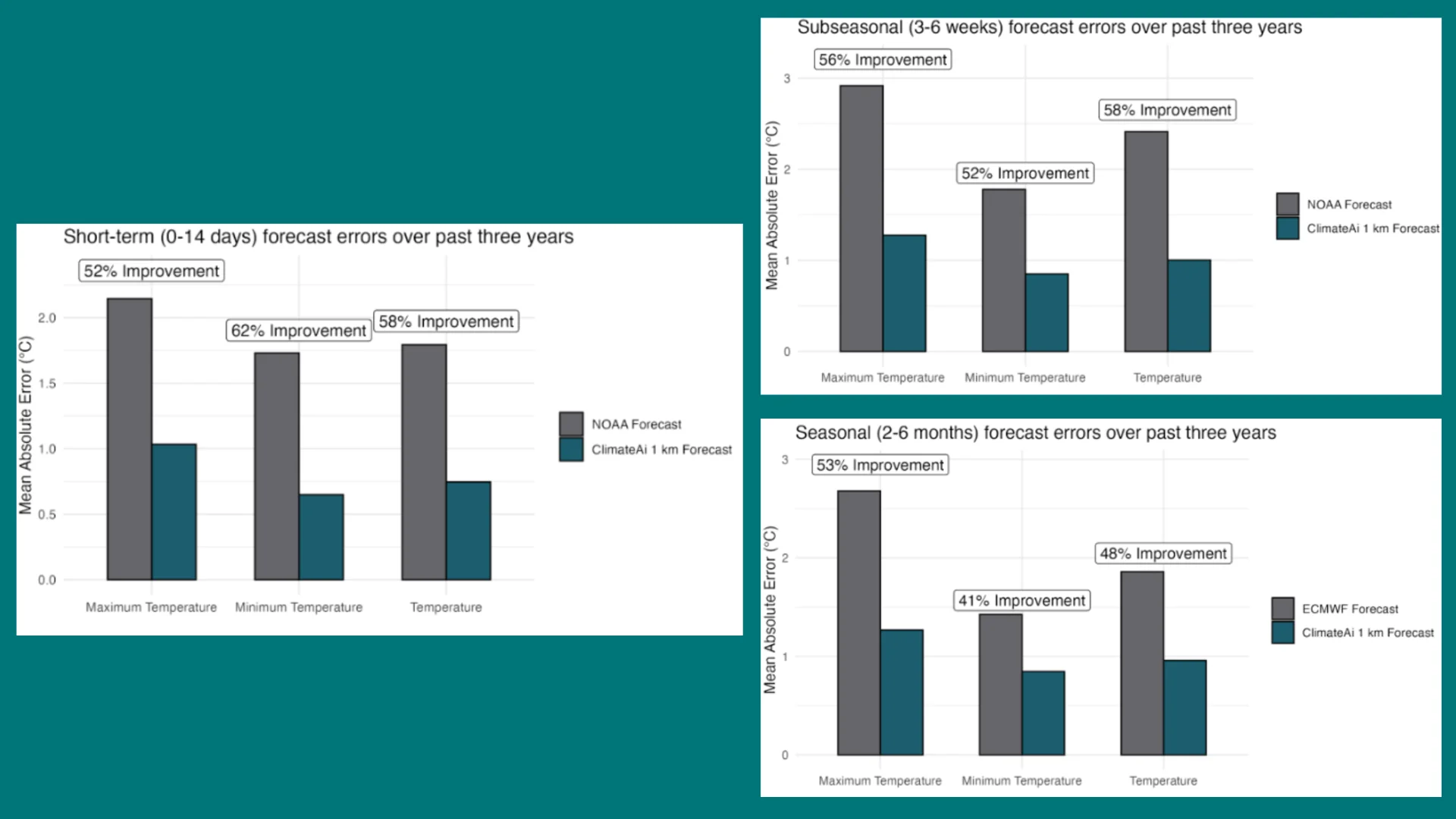

These three examples demonstrate how we consistently outperform benchmarks in temperature forecasts across various geographies and lead times.

Crops Grown in This Region: Wheat, Barley, Oats, Sugar Beets

In one of Europe’s most climate-sensitive grain-growing regions, ClimateAi achieved 19–33% lower mean forecast error than forecasts from the European Centre for Medium-Range Weather Forecasts (ECMWF) and NOAA across short-term (1–14 day), subseasonal (3–6 week), and seasonal (2–6 month) horizons.

Result: More reliable temperature projections help farmers and buyers align planting, harvesting, and procurement around shifting seasonal windows.

Crops Grown in This Region: Pistachios, Almonds, Berries, Grapes, Tomatoes

In the US West, where drought and heat volatility significantly impact agricultural yields and risks, ClimateAi’s 1 km model achieved 18–42% lower error than NOAA and ECMWF forecasts across lead times of 1 day to 6 months.

Result: Our models more accurately capture the long-term temperature and precipitation likelihood critical for irrigation, water management, and planting and harvesting timing.

Crops Grown in This Region: Coconut, Sugarcane, Rice, Cassava, Corn

The tropics can be tough regions to predict accurately. Yet ClimateAi delivered a 40–62% improvement over NOAA and ECMWF baselines across all timescales.

Result: Strong skill in monsoon-driven regions enables better timing for inputs (fertilizers, pesticides), harvesting, and export logistics, protecting yields and reducing costs.

We are proud of the accuracy of our models, knowing our lower Mean Absolute Error (MAE) will give companies more confidence to make time-critical decisions. Having said that, our main value proposition is in tying these forecasts to agricultural, hydrological, and other supply chain or operational data to give insights to avoid losses and drive business value.

Our solution offers immediate visibility into volatility across your entire supply chain, with dynamic decision tools, alerts, and data-sharing functionalities that keep you a step ahead of potential risks. Here is how our accurate models have helped businesses drive returns and exploit opportunities:

Now, our recently released AI agent will enable users to query our accurate forecasts and easily interpret them to support data-driven decisions.

The cost of inaccurate weather forecasts is increasingly measured in lives, yield, and assets lost. Hindcasting enables forecasters to demonstrate that their models can be trusted to support informed decisions.

Accuracy in forecasting underpins the quality of climate adaptation strategies, the potential ROI of those adaptations, and how resilient a company is.

To see how ClimateAi’s models can help you build a resilient business model and how we perform for your crop or region, get a demo.

You can request a custom hindcast validation demo through our platform. This shows how our forecasts for your specific crop or geography perform relative to historical weather and NOAA or ECMWF benchmarks.

Andy Paterson is a content creator and strategist at ClimateAi. Before joining the team, he was a content leader at various climate and sustainability start-ups and enterprises.

Andy has held writing, content strategy, and editing roles at BCG, Persefoni, and Good.Lab. He has helped build one of the industry’s most popular newsletters and regularly publishes environmental science articles with Research Publishing.