Alex Luna • July 27th, 2020.

By Ankur Mahesh, AI Engineer at ClimateAi

During the seventeenth century, two schools of thought characterized the philosophy of science: deductive reasoning (promoted by René Descartes) and inductive reasoning (promoted by Sir Francis Bacon). According to Sal Khan, “deductive reasoning is taking some set of facts and using that to come up with some other set of facts. Inductive reasoning is looking for a trend or for a pattern, and then generalizing.” Today, the distinction between these two approaches to science manifests itself in a climate science problem: seasonal forecasting.

Seasonal forecasting is the task of predicting a climate variable, such as temperature, two weeks to one year ahead of time. Currently, the European Centre for Medium-Range Weather Forecasts (ECMWF) and the National Oceanic and Atmospheric Administration (NOAA) use dynamical forecasting models, an example of deductive thinking. These dynamical models start from a set of facts:

1. the initial state of the atmosphere and ocean

2. the physical equations governing their behavior

3. external influences on the climate (such as greenhouse gas emissions)

4. probabilistic models to account for uncertainty in (1), (2), and (3) and limited computational power

They use this physical information to arrive at another set of facts: a future forecast of the climate.
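
As a rough illustration of this deductive recipe, here is a minimal Python sketch. It is a toy and not the ECMWF or NOAA system: the single-variable equation, the `damping` and `forcing` values, and the function names are all assumptions made for this example. The four ingredients map onto an initial anomaly, a one-line governing equation, a constant external forcing, and an ensemble of perturbed starts standing in for the probabilistic treatment of uncertainty.

```python
import random
import statistics


def step(anomaly, forcing, damping=0.1, dt=1.0):
    """One forward-Euler step of the toy equation dT/dt = -damping * T + forcing."""
    return anomaly + dt * (-damping * anomaly + forcing)


def ensemble_forecast(initial_anomaly, forcing, n_steps,
                      n_members=50, init_spread=0.2, seed=0):
    """Integrate many perturbed initial states; return ensemble mean and spread."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    finals = []
    for _ in range(n_members):
        # (1) initial state, perturbed to represent (4) uncertainty in it
        anomaly = initial_anomaly + rng.gauss(0.0, init_spread)
        for _ in range(n_steps):
            # (2) governing equation, driven by (3) external forcing
            anomaly = step(anomaly, forcing)
        finals.append(anomaly)
    return statistics.mean(finals), statistics.stdev(finals)


mean, spread = ensemble_forecast(initial_anomaly=1.0, forcing=0.05, n_steps=12)
print(f"forecast anomaly: {mean:.2f} +/- {spread:.2f}")
```

Every number the sketch produces follows from the stated equation and inputs, which is what makes the approach deductive. A real dynamical model applies the same logic to millions of grid cells and many coupled equations.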

In contrast, at ClimateAi, we use deep learning, a form of inductive reasoning, for seasonal forecasting. Deep learning models are called neural networks. Under this paradigm, a neural network is shown many examples of the predictor (the initial climate state) and the predictand (the future climate state). From these examples, an optimization algorithm adjusts the model until it captures a pattern linking the predictor to the predictand. Crucially, no physical equations are directly encoded in a neural network; therefore, it is sometimes referred to as a black box.
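
To make the inductive side concrete, the sketch below learns a rule from examples alone. It is deliberately tiny: one weight and one bias fitted by gradient descent stand in for a full neural network, and the example data, the `fit` function, and its parameters are assumptions made for illustration, not ClimateAi's model.

```python
def fit(predictors, predictands, learning_rate=0.05, epochs=500):
    """Learn w and b so that w * x + b approximates y, using gradient descent."""
    w, b = 0.0, 0.0
    n = len(predictors)
    for _ in range(epochs):
        # Error of the current rule on every training example
        errors = [w * x + b - y for x, y in zip(predictors, predictands)]
        # Gradients of the mean squared error with respect to w and b
        grad_w = 2.0 * sum(e * x for e, x in zip(errors, predictors)) / n
        grad_b = 2.0 * sum(errors) / n
        # Move the parameters a small step downhill
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Made-up examples in which the future anomaly is 0.8 * initial anomaly + 0.1
initial_states = [-1.0, -0.5, 0.0, 0.5, 1.0]
future_states = [0.8 * x + 0.1 for x in initial_states]

w, b = fit(initial_states, future_states)
print(f"learned rule: future = {w:.2f} * initial + {b:.2f}")
```

The relationship between the initial and future state is never written into `fit`; the optimizer recovers it from the pairs it is shown.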

Many stakeholders in climate-sensitive sectors hesitate to trust a “black-box” algorithm. An important question, therefore, is which school of thought offers more interpretable seasonal forecasts.

Dr. Zachary Lipton, a professor at Carnegie Mellon University, isolates two types of interpretability: “transparency (‘How does the model work?’) and post-hoc explanation (‘What else can the model tell me?’)” (Lipton, 2016).

Through the lens of transparency, dynamical models are more interpretable. Each component of a dynamical model solves physical equations governing the movement of the atmosphere and the ocean. In contrast, the exact purpose and behavior of each component of a neural network are unclear and often uninterpretable.

However, through the lens of post-hoc explanation, neural networks are promising and offer certain advantages over dynamical models. Neural networks can be used to generate “saliency maps,” which indicate the most important pixels in an image for the neural network’s prediction. In climate datasets, the “image” is a global grid of a specific variable, such as temperature. In climate science, saliency maps are uniquely informative because, in every image, each pixel refers to a specific location, given by latitude and longitude. Because a given pixel always refers to the same place, saliency maps can be averaged across multiple years. Below is the average of 20 years of saliency maps for ClimateAi’s neural network that forecasts El Niño.

In the saliency map, the gray to white shading indicates how important a region is to the neural network prediction, and the purple to yellow shading indicates how closely tied a region is to El Niño (a metric called R²). From this saliency map, we can interpret the network’s behavior: the network bases its forecasts on activity in the Pacific Ocean, specifically in regions that have a high R² with El Niño. This is in line with what we would expect, as El Niño is a phenomenon of warm and cold temperatures in the equatorial Pacific. These post-hoc visualizations are a unique benefit of neural networks. After dynamical models have been run, they offer no built-in way to generate a saliency map.
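
The idea of computing a saliency map per year and then averaging pixel by pixel can be sketched in a few lines of Python. Everything here is an assumption for illustration: `toy_model` is a hypothetical stand-in for a trained network, the 2 x 3 grids are made up, and sensitivities are estimated with finite differences rather than the backpropagated gradients typically used with real networks. The post does not specify which saliency method ClimateAi uses.

```python
import math

# Hypothetical 2 x 3 "globe": the middle cell of the second row dominates.
WEIGHTS = [[0.0, 0.0, 0.0],
           [0.1, 1.0, 0.1]]


def toy_model(grid):
    """Stand-in for a trained network: a weighted sum squashed by tanh."""
    total = sum(w * x for w_row, x_row in zip(WEIGHTS, grid)
                for w, x in zip(w_row, x_row))
    return math.tanh(total)


def saliency_map(model, grid, eps=1e-4):
    """Approximate |d(forecast) / d(pixel)| for every pixel by central differences."""
    work = [row[:] for row in grid]  # copy so the caller's grid is untouched
    saliency = []
    for i, row in enumerate(grid):
        saliency_row = []
        for j, value in enumerate(row):
            work[i][j] = value + eps
            up = model(work)
            work[i][j] = value - eps
            down = model(work)
            work[i][j] = value  # restore before moving to the next pixel
            saliency_row.append(abs(up - down) / (2 * eps))
        saliency.append(saliency_row)
    return saliency


def average_maps(maps):
    """Average pixel by pixel; valid because pixel (i, j) is the same place every year."""
    n = len(maps)
    return [[sum(m[i][j] for m in maps) / n for j in range(len(maps[0][0]))]
            for i in range(len(maps[0]))]


# Made-up temperature anomaly grids for three "years"
years = [
    [[0.2, -0.1, 0.0], [0.3, 0.5, -0.2]],
    [[-0.4, 0.1, 0.2], [0.0, -0.3, 0.1]],
    [[0.1, 0.0, -0.3], [-0.2, 0.1, 0.4]],
]
mean_saliency = average_maps([saliency_map(toy_model, g) for g in years])
```

In this toy, the averaged map peaks at the cell the model weights most heavily and is zero where the model ignores the input, which is the kind of pattern the Pacific shading above reveals for the real network.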

Despite their differences, dynamical models and machine learning share an important similarity in interpretability. Neither method can be decomposed into specific El Niño simulators, and Dr. Lipton identifies decomposability as a form of transparency. In a dynamical model, El Niño emerges as a result of the overall interactions between the atmosphere and ocean components, but there is no specific module or code that directly simulates it (Guilyardi, 2017). (This property of El Niño is called emergence.) Likewise, in a neural network, there is no specific layer or filter that can be physically interpreted as the El Niño simulator; the El Niño forecast emerges from the interaction of the weights and layers as a whole.

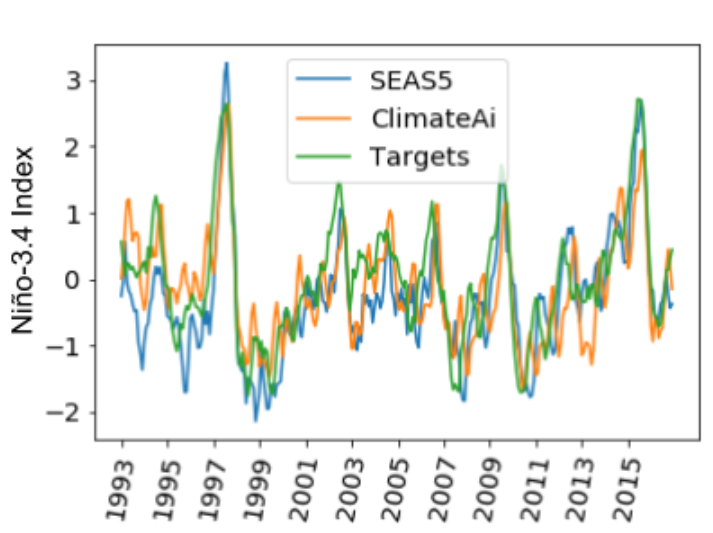

Both neural networks and dynamical models have emergent properties which are not strictly interpretable or decomposable, so we must validate their underlying systems. ClimateAi builds trust in its neural networks in much the same way that ECMWF builds trust in its dynamical models: we compare ClimateAi’s neural network forecasts, ECMWF’s dynamical model forecasts (labeled in the graph below as “SEAS5”), and the true “Target” state of El Niño.
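
One common way to put such a comparison into numbers is the root-mean-square error (RMSE) of each forecast against the target. The sketch below assumes that metric for illustration; the post does not say which scores ClimateAi uses, and the series are placeholder values, not real forecasts or observations.

```python
import math


def rmse(forecast, target):
    """Root-mean-square error between a forecast series and the observed target."""
    squared_errors = [(f - t) ** 2 for f, t in zip(forecast, target)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


# Placeholder El Niño index values, made up for this example
target = [0.5, 1.0, 1.5, 1.0, 0.0]
forecasts = {
    "forecast_a": [0.4, 1.1, 1.3, 0.9, 0.1],
    "forecast_b": [0.6, 0.8, 1.6, 1.2, -0.1],
}

# Lower RMSE means the forecast tracked the target more closely
scores = {name: rmse(series, target) for name, series in forecasts.items()}
for name, score in scores.items():
    print(f"{name}: RMSE = {score:.3f}")
```

Scoring both kinds of model against the same target puts them on equal footing, regardless of how transparent their internals are.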

At ClimateAi, we are actively researching ways to develop transparent methods of seasonal forecasting. By dissecting the strengths and weaknesses of deep learning and dynamical models, we are excited to view this task from the lens of an age-old contrast in the philosophy of science. In order to develop the most reliable and interpretable seasonal forecasts, we hope to leverage both deductive and inductive reasoning.

Acknowledgements

I’d like to thank Dr. V. Balaji and Dr. Travis O’Brien for their helpful discussions regarding this topic. I’d also like to thank Max Evans, Garima Jain, Mattias Castillo, Aranildo Lima, Brent Lunghino, Himanshu Gupta, Dr. Carlos Gaitan, Jarrett Hunt, Omeed Tavasoli, and Dr. Patrick T. Brown for their collaboration on our project.

References

Guilyardi, Eric. “Challenges with ENSO in Today’s Climate Models.” National Oceanic and Atmospheric Administration (2015).

Khan, Salman. “Inductive & deductive reasoning.” Khanacademy.com.

Lipton, Zachary C. “The mythos of model interpretability.” arXiv preprint arXiv:1606.03490 (2016).